Introduction

LM Arena is a platform that helps businesses, developers, and AI enthusiasts compare and evaluate AI models side by side. Rather than building AI, it focuses on analysing each model’s performance, accuracy, speed, and suitability for a given task. It suits anyone who wants to choose the best model for their requirements without guesswork or extensive in-house testing.

Competitor Comparison

LM Arena competes with tools like Hugging Face Spaces, Papers With Code, EvalAI, OpenAI Playground, and PromptLayer.

| Tool | Strengths |
|---|---|
| LM Arena | Compare multiple AI models, performance benchmarking, user-friendly interface |
| Hugging Face Spaces | Large library of models, community contributions |
| Papers With Code | Research-driven, detailed metrics |
| EvalAI | Automated evaluation of AI models |
| OpenAI Playground | Quick testing of OpenAI models |
| PromptLayer | Track and manage prompts for AI testing |

Pricing & User Base

At the time of writing, LM Arena offers free access for basic usage, with premium features expected in future updates.

Primary Users: Businesses, AI researchers, data scientists, educators, and AI enthusiasts.

Difficulty Level

Ease of Use: Easy

LM Arena provides an intuitive interface, making it straightforward to compare AI models without technical expertise.

Use Case Example

Imagine you run a small e-commerce business and want to implement AI for customer support. You are unsure which model will provide the most accurate and natural responses.

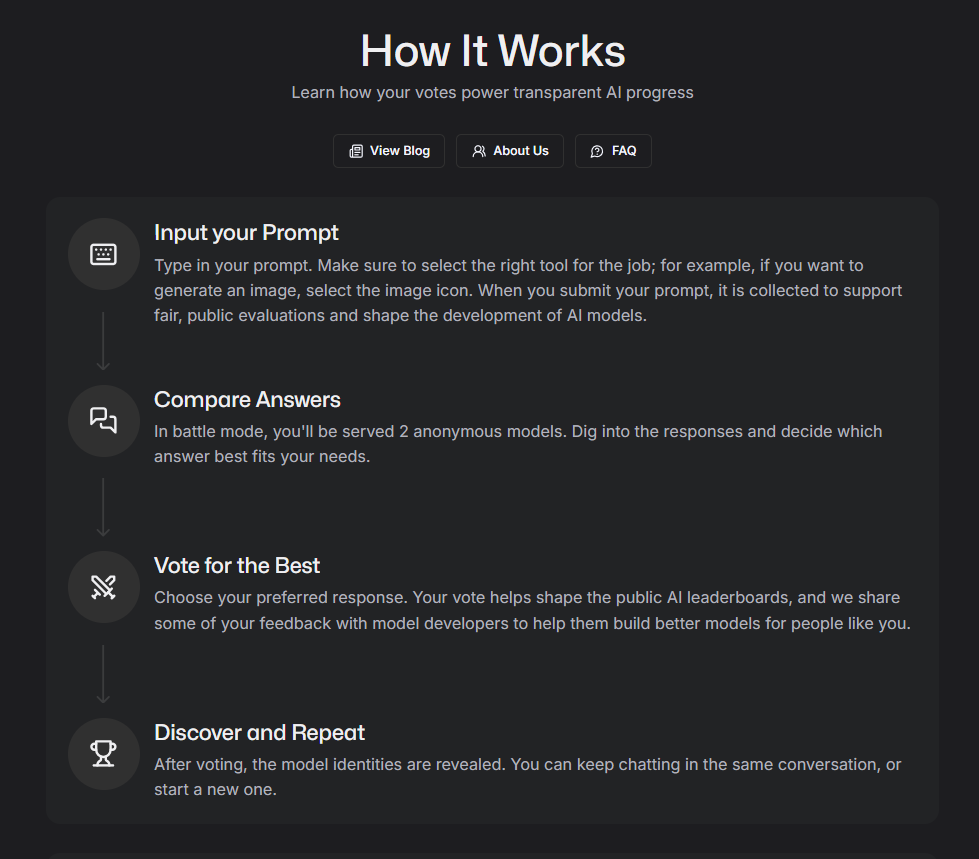

1. Open LM Arena and select AI models designed for conversational tasks.

2. Run the same customer inquiry prompts, like “What is your return policy?” or “Can I change my delivery address?”, across all models.

3. Review performance metrics such as response accuracy, speed, and tone consistency.

4. Choose the model that best aligns with your business needs.

This approach reduces the guesswork, gives you evidence that your AI assistant is reliable before you commit to it, and saves time and money.
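The same workflow can be sketched in a few lines of Python for readers who want to see the logic behind a side-by-side comparison. This is a minimal local illustration, not LM Arena's own API: the functions `model_a` and `model_b` are hypothetical stand-ins for real models, and the keyword check is an assumed, deliberately crude proxy for response accuracy.

```python
import time
from statistics import mean
from typing import Callable, Dict, List


# Hypothetical stand-ins for real models: each takes a prompt and returns a reply.
def model_a(prompt: str) -> str:
    return ("You can return any item within 30 days. "
            "Contact support to update your delivery address.")


def model_b(prompt: str) -> str:
    return "Please check our website."


MODELS: Dict[str, Callable[[str], str]] = {"model_a": model_a, "model_b": model_b}

# Each prompt maps to keywords a good answer is assumed to mention.
PROMPTS: Dict[str, List[str]] = {
    "What is your return policy?": ["return", "30 days"],
    "Can I change my delivery address?": ["delivery address"],
}


def keyword_score(reply: str, keywords: List[str]) -> float:
    """Fraction of expected keywords found in the reply (a crude accuracy proxy)."""
    text = reply.lower()
    return sum(k in text for k in keywords) / len(keywords)


def compare(models: Dict[str, Callable[[str], str]],
            prompts: Dict[str, List[str]]) -> Dict[str, Dict[str, float]]:
    """Run every prompt through every model and average score and latency."""
    results = {}
    for name, ask in models.items():
        scores, latencies = [], []
        for prompt, keywords in prompts.items():
            start = time.perf_counter()
            reply = ask(prompt)
            latencies.append(time.perf_counter() - start)
            scores.append(keyword_score(reply, keywords))
        results[name] = {"score": mean(scores), "latency_s": mean(latencies)}
    return results


def best_model(results: Dict[str, Dict[str, float]]) -> str:
    """Pick the highest score, breaking ties in favour of lower latency."""
    return max(results, key=lambda n: (results[n]["score"], -results[n]["latency_s"]))


if __name__ == "__main__":
    results = compare(MODELS, PROMPTS)
    for name, stats in results.items():
        print(name, stats)
    print("Best:", best_model(results))
```

In practice you would replace the stand-in functions with calls to the models you are evaluating and swap the keyword check for whatever quality measure matters to your business, such as human review of tone.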

Pros and Cons

Pros

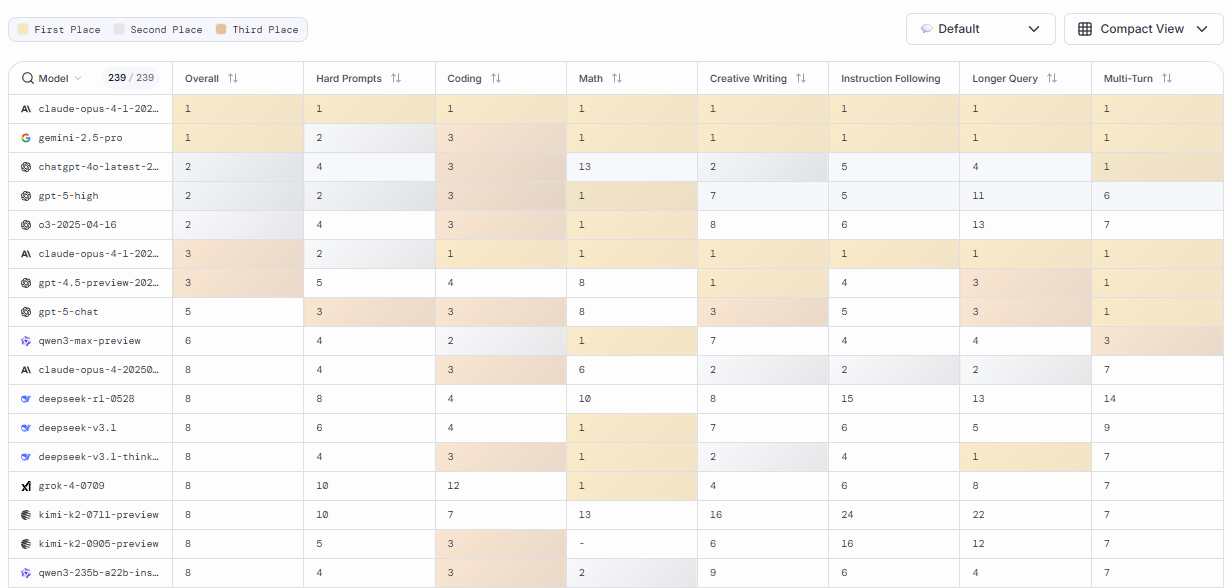

- Compare multiple AI models side by side

- Provides performance metrics and benchmarking


- Saves time and resources for AI selection

Cons

- Limited to evaluation; does not create AI models

- Some advanced analytics may require premium access in future

- Focused mainly on model comparison, not deployment

Integration & Compatibility

- Web-based platform accessible from any modern browser

- Compatible with AI models from popular providers and model hubs such as OpenAI and Hugging Face

- Allows exporting comparison results for reporting or internal use

Support & Resources

- Detailed documentation and tutorials

- Community forum for sharing insights and tips

- Contact support for questions about model evaluation

If you want to explore how AI can accelerate your growth, consider joining a Nimbull AI Training Day or reaching out for personalised AI Consulting services.